The experiments in this article were run on CentOS Linux release 7.6.1810 (Core).

1. Environment preparation

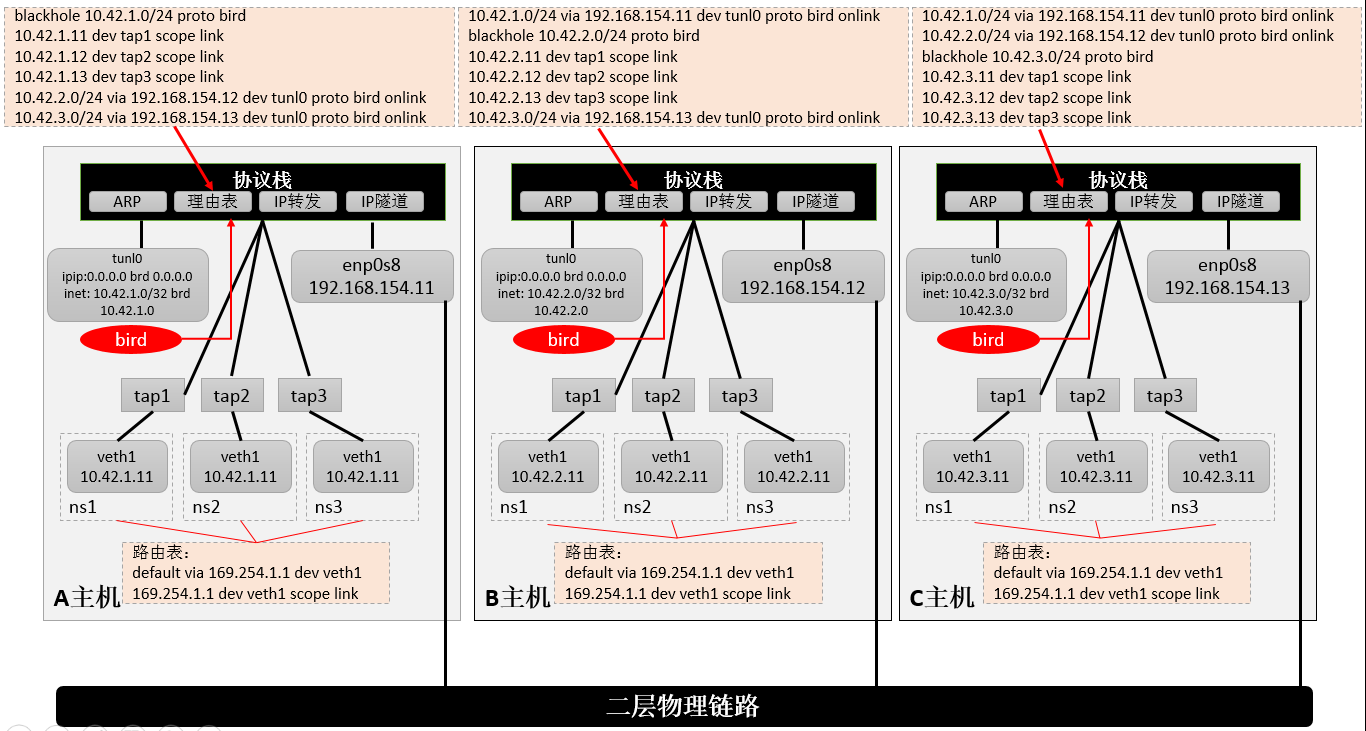

Prepare three hosts running CentOS 7. In this article their IP addresses are host A (192.168.154.11), host B (192.168.154.12), and host C (192.168.154.13).

1.1 Final deployment architecture diagram

1.2 Notes

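- BIRD's main job is to generate the routing table; these routes direct traffic to the tunl0 device.

- For communication between namespaces on different hosts, the tunl0 device encapsulates the packets with the IP-in-IP tunnel protocol and forwards them to the other server through the enp0s8 NIC.

- For communication between namespaces on the same host, traffic follows the tap1, tap2, and tap3 routes directly.

- For how the namespaces' default gateway 169.254.1.1 works, see section 1.4 of "Linux IPIP tunnel test 2: simulating a Calico network (three hosts, single NIC, multiple namespaces)".

- ★★ In Calico, BIRD only manages the routing table. For example, for server 192.168.154.11 to reach the 10.42.2.0/24 network on 192.168.154.12, BIRD automatically generates the following route from its configuration:

10.42.2.0/24 via 192.168.154.12 dev tunl0 proto bird onlink

The IP tunnel then forwards packets according to this route. Once the routes have been written to the kernel routing table, stopping the bird service does not affect connectivity between namespaces on different hosts (the persist option in the kernel protocol keeps the routes in place on shutdown). After BIRD has created the routes, the Linux kernel networking stack (IP tunneling, routing, and so on) handles the traffic.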
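As a quick check of the route described above, the kernel routes that BIRD installed can be picked out by their `proto bird` tag. The following is a minimal sketch; the helper name `bird_tunnel_routes` is hypothetical, and it only filters `ip route` output read from standard input.

```shell
# Hypothetical helper: keep only the routes that BIRD installed on tunl0.
# Reads `ip route` output on stdin and prints the matching lines.
bird_tunnel_routes() {
    grep 'proto bird' | grep 'dev tunl0'
}

# Usage on a host that has been configured as described in this article:
#   ip route show | bird_tunnel_routes
```

On host A, one line per remote /24 block would be expected once the BGP sessions are up; an empty result suggests that BIRD has not exported any tunnel routes to the kernel yet.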

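2. Download and install the BIRD build maintained by Calico

2.1 Download

Here we copy the binaries out of the Calico image, so a machine with Docker installed is needed. First pull the calico/node:v3.11.1 image: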

docker pull calico/node:v3.11.1

Then start a temporary calico/node:v3.11.1 container and copy bird, bird6, and birdcl out of it:

docker run --name calico-temp -d calico/node:v3.11.1 sleep 200

docker cp calico-temp:/usr/bin/bird ./

docker cp calico-temp:/usr/bin/bird6 ./

docker cp calico-temp:/usr/bin/birdcl ./

Once the copy is complete, the temporary container can be removed:

docker stop calico-temp

docker rm calico-temp
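The same extraction can also be done without starting the container, since `docker cp` works on a container that has only been created. The sketch below wraps the steps in a function; the function name `extract_bird` and the `DOCKER` override variable are hypothetical, introduced only so the steps can be dry-run.

```shell
# DOCKER can be overridden (for example DOCKER=echo) to print the commands
# instead of running them.
DOCKER="${DOCKER:-docker}"
IMAGE="calico/node:v3.11.1"

# Hypothetical helper: copy bird, bird6 and birdcl out of the image.
# $1 is the destination directory (defaults to the current directory).
extract_bird() {
    dest="${1:-.}"
    # Create (but do not start) a throwaway container from the image.
    $DOCKER create --name calico-temp "$IMAGE" || return 1
    for bin in bird bird6 birdcl; do
        $DOCKER cp "calico-temp:/usr/bin/$bin" "$dest/" || return 1
    done
    # Remove the throwaway container again.
    $DOCKER rm calico-temp
}
```

Calling `extract_bird .` leaves the three binaries in the current directory. Compared with the `sleep 200` approach, there is no running container to stop and no time window to keep in mind.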

2.2 Installation

Copy bird, bird6, and birdcl to the /usr/local/sbin/ directory on the three servers 192.168.154.11, 192.168.154.12, and 192.168.154.13, and make them executable:

chmod +x /usr/local/sbin/bird*
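If the three servers are reachable over SSH, the copy and the `chmod` can be scripted in one loop. This is only a sketch under that assumption: root SSH access, the function name `install_bird`, and the `RUN` dry-run variable are not part of the original setup.

```shell
# RUN=echo prints the commands instead of executing them (dry run).
RUN="${RUN:-}"
HOSTS="192.168.154.11 192.168.154.12 192.168.154.13"

# Hypothetical helper: push the three binaries from the current directory
# to every host and make them executable there.
install_bird() {
    for host in $HOSTS; do
        $RUN scp bird bird6 birdcl "root@$host:/usr/local/sbin/" || return 1
        $RUN ssh "root@$host" 'chmod +x /usr/local/sbin/bird*' || return 1
    done
}
```

Run `install_bird` from the directory that holds the extracted binaries. Setting `RUN=echo` first shows the commands without executing them.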

3. Configure BIRD

3.1 Configuration for server 192.168.154.11

mkdir /etc/bird-cfg/

cat > /etc/bird-cfg/bird.cfg << EOL

protocol static {

# IP blocks for this host.

route 10.42.1.0/24 blackhole;

}

# Aggregation of routes on this host; export the block, nothing beneath it.

function calico_aggr ()

{

# Block 10.42.1.0/24 is confirmed

if ( net = 10.42.1.0/24 ) then { accept; }

if ( net ~ 10.42.1.0/24 ) then { reject; }

}

filter calico_export_to_bgp_peers {

calico_aggr();

if ( net ~ 10.42.0.0/16 ) then {

accept;

}

reject;

}

filter calico_kernel_programming {

if ( net ~ 10.42.0.0/16 ) then {

krt_tunnel = "tunl0";

accept;

}

accept;

}

router id 192.168.154.11;

# Configure synchronization between routing tables and kernel.

protocol kernel {

learn; # Learn all alien routes from the kernel

persist; # Don't remove routes on bird shutdown

scan time 2; # Scan kernel routing table every 2 seconds

import all;

export filter calico_kernel_programming; # Default is export none

graceful restart; # Turn on graceful restart to reduce potential flaps in

# routes when reloading BIRD configuration. With a full

# automatic mesh, there is no way to prevent BGP from

# flapping since multiple nodes update their BGP

# configuration at the same time, GR is not guaranteed to

# work correctly in this scenario.

}

# Watch interface up/down events.

protocol device {

debug all;

scan time 2; # Scan interfaces every 2 seconds

}

protocol direct {

debug all;

interface -"tap*", "*"; # Exclude tap* but include everything else.

}

# Template for all BGP clients

template bgp bgp_template {

debug all;

description "Connection to BGP peer";

local as 64512;

multihop;

gateway recursive; # This should be the default, but just in case.

import all; # Import all routes, since we don't know what the upstream

# topology is and therefore have to trust the ToR/RR.

export filter calico_export_to_bgp_peers; # Only want to export routes for workloads.

source address 192.168.154.11; # The local address we use for the TCP connection

add paths on;

graceful restart; # See comment in kernel section about graceful restart.

connect delay time 2;

connect retry time 5;

error wait time 5,30;

}

protocol bgp Mesh_192_168_154_12 from bgp_template {

neighbor 192.168.154.12 as 64512;

}

protocol bgp Mesh_192_168_154_13 from bgp_template {

neighbor 192.168.154.13 as 64512;

#passive on; # Mesh is unidirectional, peer will connect to us.

}

EOL

3.2 Configuration for server 192.168.154.12

mkdir /etc/bird-cfg/

cat > /etc/bird-cfg/bird.cfg << EOL

protocol static {

# IP blocks for this host.

route 10.42.2.0/24 blackhole;

}

# Aggregation of routes on this host; export the block, nothing beneath it.

function calico_aggr ()

{

# Block 10.42.2.0/24 is confirmed

if ( net = 10.42.2.0/24 ) then { accept; }

if ( net ~ 10.42.2.0/24 ) then { reject; }

}

filter calico_export_to_bgp_peers {

calico_aggr();

if ( net ~ 10.42.0.0/16 ) then {

accept;

}

reject;

}

filter calico_kernel_programming {

if ( net ~ 10.42.0.0/16 ) then {

krt_tunnel = "tunl0";

accept;

}

accept;

}

router id 192.168.154.12;

# Configure synchronization between routing tables and kernel.

protocol kernel {

learn; # Learn all alien routes from the kernel

persist; # Don't remove routes on bird shutdown

scan time 2; # Scan kernel routing table every 2 seconds

import all;

export filter calico_kernel_programming; # Default is export none

graceful restart; # Turn on graceful restart to reduce potential flaps in

# routes when reloading BIRD configuration. With a full

# automatic mesh, there is no way to prevent BGP from

# flapping since multiple nodes update their BGP

# configuration at the same time, GR is not guaranteed to

# work correctly in this scenario.

}

# Watch interface up/down events.

protocol device {

debug all;

scan time 2; # Scan interfaces every 2 seconds

}

protocol direct {

debug all;

interface -"tap*", "*"; # Exclude tap* but include everything else.

}

# Template for all BGP clients

template bgp bgp_template {

debug all;

description "Connection to BGP peer";

local as 64512;

multihop;

gateway recursive; # This should be the default, but just in case.

import all; # Import all routes, since we don't know what the upstream

# topology is and therefore have to trust the ToR/RR.

export filter calico_export_to_bgp_peers; # Only want to export routes for workloads.

source address 192.168.154.12; # The local address we use for the TCP connection

add paths on;

graceful restart; # See comment in kernel section about graceful restart.

connect delay time 2;

connect retry time 5;

error wait time 5,30;

}

# For peer /host/ipvs-master1/ip_addr_v4

protocol bgp Mesh_192_168_154_11 from bgp_template {

neighbor 192.168.154.11 as 64512;

}

protocol bgp Mesh_192_168_154_13 from bgp_template {

neighbor 192.168.154.13 as 64512;

#passive on; # Mesh is unidirectional, peer will connect to us.

}

EOL

3.3 Configuration for server 192.168.154.13

mkdir /etc/bird-cfg/

cat > /etc/bird-cfg/bird.cfg << EOL

protocol static {

# IP blocks for this host.

route 10.42.3.0/24 blackhole;

}

# Aggregation of routes on this host; export the block, nothing beneath it.

function calico_aggr ()

{

# Block 10.42.3.0/24 is confirmed

if ( net = 10.42.3.0/24 ) then { accept; }

if ( net ~ 10.42.3.0/24 ) then { reject; }

}

filter calico_export_to_bgp_peers {

calico_aggr();

if ( net ~ 10.42.0.0/16 ) then {

accept;

}

reject;

}

filter calico_kernel_programming {

if ( net ~ 10.42.0.0/16 ) then {

krt_tunnel = "tunl0";

accept;

}

accept;

}

router id 192.168.154.13;

# Configure synchronization between routing tables and kernel.

protocol kernel {

learn; # Learn all alien routes from the kernel

persist; # Don't remove routes on bird shutdown

scan time 2; # Scan kernel routing table every 2 seconds

import all;

export filter calico_kernel_programming; # Default is export none

graceful restart; # Turn on graceful restart to reduce potential flaps in

# routes when reloading BIRD configuration. With a full

# automatic mesh, there is no way to prevent BGP from

# flapping since multiple nodes update their BGP

# configuration at the same time, GR is not guaranteed to

# work correctly in this scenario.

}

# Watch interface up/down events.

protocol device {

debug all;

scan time 2; # Scan interfaces every 2 seconds

}

protocol direct {

debug all;

interface -"tap*", "*"; # Exclude tap* but include everything else.

}

# Template for all BGP clients

template bgp bgp_template {

debug all;

description "Connection to BGP peer";

local as 64512;

multihop;

gateway recursive; # This should be the default, but just in case.

import all; # Import all routes, since we don't know what the upstream

# topology is and therefore have to trust the ToR/RR.

export filter calico_export_to_bgp_peers; # Only want to export routes for workloads.

source address 192.168.154.13; # The local address we use for the TCP connection

add paths on;

graceful restart; # See comment in kernel section about graceful restart.

connect delay time 2;

connect retry time 5;

error wait time 5,30;

}

protocol bgp Mesh_192_168_154_11 from bgp_template {

neighbor 192.168.154.11 as 64512;

}

protocol bgp Mesh_192_168_154_12 from bgp_template {

neighbor 192.168.154.12 as 64512;

#passive on; # Mesh is unidirectional, peer will connect to us.

}

EOL
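The three configuration files above differ only in the host's own address, its /24 block, and the list of `protocol bgp` peers. As an illustration, the peer stanzas can be generated instead of typed by hand. This is a sketch only: the function name `bgp_peer_stanzas` and its argument order are assumptions, and it leaves out the commented-out `passive on` line.

```shell
# Hypothetical helper: print one "protocol bgp" stanza per mesh peer.
# $1 is this host's address; the remaining arguments are all mesh members.
bgp_peer_stanzas() {
    self="$1"
    shift
    for peer in "$@"; do
        # A host does not peer with itself.
        [ "$peer" = "$self" ] && continue
        # Build a Mesh_192_168_154_12 style name: dots become underscores.
        name="Mesh_$(printf '%s' "$peer" | tr '.' '_')"
        printf 'protocol bgp %s from bgp_template {\n' "$name"
        printf '  neighbor %s as 64512;\n' "$peer"
        printf '}\n\n'
    done
}

# Example: the stanzas for host A (192.168.154.11).
bgp_peer_stanzas 192.168.154.11 192.168.154.11 192.168.154.12 192.168.154.13
```

Passing the same full member list on every host and changing only the first argument keeps the mesh definition in one place, so a peer cannot be forgotten on one of the servers.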

4. Start BIRD

Run the start command on each of the three servers:

bird -R -s /var/run/bird.ctl -d -c /etc/bird-cfg/bird.cfg

The birdcl console can be used to inspect BIRD's routes, protocol configuration, and so on:

birdcl -s /var/run/bird.ctl

> show protocols

> show route

Official documentation: https://bird.network.cz/?get_doc&v=16&f=bird-4.html
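To confirm that the mesh has come up, the `show protocols` output can be checked for BGP sessions in the `Established` state. The following is a rough sketch. It assumes the BIRD 1.x table layout, in which BGP rows carry `BGP` in the second column and the session state in the last one, and it assumes that birdcl accepts a command as trailing arguments. The function name `count_established` is hypothetical.

```shell
# Hypothetical helper: count BGP sessions reported as Established.
# Reads `show protocols` output on stdin and prints a single number.
count_established() {
    awk '$2 == "BGP" && $NF == "Established" { n++ } END { print n + 0 }'
}

# Usage on a host where BIRD is running:
#   birdcl -s /var/run/bird.ctl show protocols | count_established
```

In the three-host mesh of this article, each host has two peers, so a result of 2 would indicate that both sessions are established.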

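5. Configure the test network

5.1 Network configuration for server 192.168.154.11

Most of these commands already appear in sections 2.2 and 4.2 of "Linux IPIP tunnel test 2: simulating a Calico network (three hosts, single NIC, multiple namespaces)", so refer to the comments there for explanations. The traffic flow works the same way as in section 4.2. The main difference from the commands in section 4.2 is that the two commands ip route add 10.42.2.0/24 via 192.168.154.12 dev tunl0 onlink and ip route add 10.42.3.0/24 via 192.168.154.13 dev tunl0 onlink are omitted, because BIRD automatically generates the routes to the namespaces on the neighboring nodes, for example: 10.42.2.0/24 via 192.168.154.12 dev tunl0 proto bird onlink.

The configuration commands are as follows: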

cat > /etc/sysctl.d/30-ipforward.conf<<EOL

net.ipv4.ip_forward=1

net.ipv6.conf.default.forwarding=1

net.ipv6.conf.all.forwarding=1

EOL

sysctl -p /etc/sysctl.d/30-ipforward.conf

ip netns add ns1

ip netns add ns2

ip netns add ns3

ip link add tap1 type veth peer name veth1 netns ns1

ip link add tap2 type veth peer name veth1 netns ns2

ip link add tap3 type veth peer name veth1 netns ns3

ip l set address ee:ee:ee:ee:ee:ee dev tap1

ip l set address ee:ee:ee:ee:ee:ee dev tap2

ip l set address ee:ee:ee:ee:ee:ee dev tap3

echo 1 > /proc/sys/net/ipv4/conf/tap1/proxy_arp

echo 1 > /proc/sys/net/ipv4/conf/tap2/proxy_arp

echo 1 > /proc/sys/net/ipv4/conf/tap3/proxy_arp

ip link set tap1 up

ip link set tap2 up

ip link set tap3 up

ip r a 10.42.1.11 dev tap1

ip r a 10.42.1.12 dev tap2

ip r a 10.42.1.13 dev tap3

ip netns exec ns1 ip addr add 10.42.1.11/32 dev veth1

ip netns exec ns2 ip addr add 10.42.1.12/32 dev veth1

ip netns exec ns3 ip addr add 10.42.1.13/32 dev veth1

ip netns exec ns1 ip link set veth1 up

ip netns exec ns2 ip link set veth1 up

ip netns exec ns3 ip link set veth1 up

ip netns exec ns1 ip link set lo up

ip netns exec ns2 ip link set lo up

ip netns exec ns3 ip link set lo up

ip netns exec ns1 ip route add 169.254.1.1 dev veth1

ip netns exec ns2 ip route add 169.254.1.1 dev veth1

ip netns exec ns3 ip route add 169.254.1.1 dev veth1

ip netns exec ns1 ip route add default via 169.254.1.1 dev veth1

ip netns exec ns2 ip route add default via 169.254.1.1 dev veth1

ip netns exec ns3 ip route add default via 169.254.1.1 dev veth1

ip netns exec ns1 ip neigh add 169.254.1.1 dev veth1 lladdr ee:ee:ee:ee:ee:ee

ip netns exec ns2 ip neigh add 169.254.1.1 dev veth1 lladdr ee:ee:ee:ee:ee:ee

ip netns exec ns3 ip neigh add 169.254.1.1 dev veth1 lladdr ee:ee:ee:ee:ee:ee

modprobe ipip

ip a a 10.42.1.0/32 brd 10.42.1.0 dev tunl0

ip link set tunl0 up

iptables -F

5.2 Network configuration for server 192.168.154.12

cat > /etc/sysctl.d/30-ipforward.conf<<EOL

net.ipv4.ip_forward=1

net.ipv6.conf.default.forwarding=1

net.ipv6.conf.all.forwarding=1

EOL

sysctl -p /etc/sysctl.d/30-ipforward.conf

ip netns add ns1

ip netns add ns2

ip netns add ns3

ip link add tap1 type veth peer name veth1 netns ns1

ip link add tap2 type veth peer name veth1 netns ns2

ip link add tap3 type veth peer name veth1 netns ns3

ip l set address ee:ee:ee:ee:ee:ee dev tap1

ip l set address ee:ee:ee:ee:ee:ee dev tap2

ip l set address ee:ee:ee:ee:ee:ee dev tap3

echo 1 > /proc/sys/net/ipv4/conf/tap1/proxy_arp

echo 1 > /proc/sys/net/ipv4/conf/tap2/proxy_arp

echo 1 > /proc/sys/net/ipv4/conf/tap3/proxy_arp

ip link set tap1 up

ip link set tap2 up

ip link set tap3 up

ip r a 10.42.2.11 dev tap1

ip r a 10.42.2.12 dev tap2

ip r a 10.42.2.13 dev tap3

ip netns exec ns1 ip addr add 10.42.2.11/32 dev veth1

ip netns exec ns2 ip addr add 10.42.2.12/32 dev veth1

ip netns exec ns3 ip addr add 10.42.2.13/32 dev veth1

ip netns exec ns1 ip link set veth1 up

ip netns exec ns2 ip link set veth1 up

ip netns exec ns3 ip link set veth1 up

ip netns exec ns1 ip link set lo up

ip netns exec ns2 ip link set lo up

ip netns exec ns3 ip link set lo up

ip netns exec ns1 ip route add 169.254.1.1 dev veth1

ip netns exec ns2 ip route add 169.254.1.1 dev veth1

ip netns exec ns3 ip route add 169.254.1.1 dev veth1

ip netns exec ns1 ip route add default via 169.254.1.1 dev veth1

ip netns exec ns2 ip route add default via 169.254.1.1 dev veth1

ip netns exec ns3 ip route add default via 169.254.1.1 dev veth1

ip netns exec ns1 ip neigh add 169.254.1.1 dev veth1 lladdr ee:ee:ee:ee:ee:ee

ip netns exec ns2 ip neigh add 169.254.1.1 dev veth1 lladdr ee:ee:ee:ee:ee:ee

ip netns exec ns3 ip neigh add 169.254.1.1 dev veth1 lladdr ee:ee:ee:ee:ee:ee

modprobe ipip

ip a a 10.42.2.0/32 brd 10.42.2.0 dev tunl0

ip link set tunl0 up

iptables -F

5.3 Network configuration for server 192.168.154.13

cat > /etc/sysctl.d/30-ipforward.conf<<EOL

net.ipv4.ip_forward=1

net.ipv6.conf.default.forwarding=1

net.ipv6.conf.all.forwarding=1

EOL

sysctl -p /etc/sysctl.d/30-ipforward.conf

ip netns add ns1

ip netns add ns2

ip netns add ns3

ip link add tap1 type veth peer name veth1 netns ns1

ip link add tap2 type veth peer name veth1 netns ns2

ip link add tap3 type veth peer name veth1 netns ns3

ip l set address ee:ee:ee:ee:ee:ee dev tap1

ip l set address ee:ee:ee:ee:ee:ee dev tap2

ip l set address ee:ee:ee:ee:ee:ee dev tap3

echo 1 > /proc/sys/net/ipv4/conf/tap1/proxy_arp

echo 1 > /proc/sys/net/ipv4/conf/tap2/proxy_arp

echo 1 > /proc/sys/net/ipv4/conf/tap3/proxy_arp

ip link set tap1 up

ip link set tap2 up

ip link set tap3 up

ip r a 10.42.3.11 dev tap1

ip r a 10.42.3.12 dev tap2

ip r a 10.42.3.13 dev tap3

ip netns exec ns1 ip addr add 10.42.3.11/32 dev veth1

ip netns exec ns2 ip addr add 10.42.3.12/32 dev veth1

ip netns exec ns3 ip addr add 10.42.3.13/32 dev veth1

ip netns exec ns1 ip link set veth1 up

ip netns exec ns2 ip link set veth1 up

ip netns exec ns3 ip link set veth1 up

ip netns exec ns1 ip link set lo up

ip netns exec ns2 ip link set lo up

ip netns exec ns3 ip link set lo up

ip netns exec ns1 ip route add 169.254.1.1 dev veth1

ip netns exec ns2 ip route add 169.254.1.1 dev veth1

ip netns exec ns3 ip route add 169.254.1.1 dev veth1

ip netns exec ns1 ip route add default via 169.254.1.1 dev veth1

ip netns exec ns2 ip route add default via 169.254.1.1 dev veth1

ip netns exec ns3 ip route add default via 169.254.1.1 dev veth1

ip netns exec ns1 ip neigh add 169.254.1.1 dev veth1 lladdr ee:ee:ee:ee:ee:ee

ip netns exec ns2 ip neigh add 169.254.1.1 dev veth1 lladdr ee:ee:ee:ee:ee:ee

ip netns exec ns3 ip neigh add 169.254.1.1 dev veth1 lladdr ee:ee:ee:ee:ee:ee

modprobe ipip

ip a a 10.42.3.0/32 brd 10.42.3.0 dev tunl0

ip link set tunl0 up

iptables -F
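The three per-host command lists in this section differ only in the third octet of the 10.42.x addresses (1, 2, 3), so the namespace and tunnel setup can be expressed as a loop. The following is a sketch, not a replacement for the commands above. The function name `setup_namespaces`, the `RUN` dry-run variable, and the host-index argument are assumptions. It sets proxy_arp with `sysctl -w` instead of `echo`, and it leaves out the ip_forward sysctl file and `iptables -F`. It must run as root when `RUN` is empty.

```shell
# RUN=echo prints the commands instead of executing them (dry run).
RUN="${RUN:-}"

# Hypothetical helper. $1 is the host index:
# 1 for 192.168.154.11, 2 for 192.168.154.12, 3 for 192.168.154.13.
setup_namespaces() {
    host="$1"
    for i in 1 2 3; do
        ns="ns$i"; tap="tap$i"; ip4="10.42.$host.1$i"
        # Namespace plus veth pair: tapN on the host, veth1 inside nsN.
        $RUN ip netns add "$ns"
        $RUN ip link add "$tap" type veth peer name veth1 netns "$ns"
        $RUN ip link set address ee:ee:ee:ee:ee:ee dev "$tap"
        $RUN sysctl -q -w "net.ipv4.conf.$tap.proxy_arp=1"
        $RUN ip link set "$tap" up
        # Host route to the namespace address through its tap device.
        $RUN ip route add "$ip4" dev "$tap"
        # Address, links and the 169.254.1.1 default gateway inside nsN.
        $RUN ip netns exec "$ns" ip addr add "$ip4/32" dev veth1
        $RUN ip netns exec "$ns" ip link set veth1 up
        $RUN ip netns exec "$ns" ip link set lo up
        $RUN ip netns exec "$ns" ip route add 169.254.1.1 dev veth1
        $RUN ip netns exec "$ns" ip route add default via 169.254.1.1 dev veth1
        $RUN ip netns exec "$ns" ip neigh add 169.254.1.1 dev veth1 lladdr ee:ee:ee:ee:ee:ee
    done
    # IP-in-IP tunnel device carrying this host's block address.
    $RUN modprobe ipip
    $RUN ip addr add "10.42.$host.0/32" brd "10.42.$host.0" dev tunl0
    $RUN ip link set tunl0 up
}
```

With `RUN=echo` set, `setup_namespaces 2` prints the commands for host B without touching the system. Once all three hosts are configured and BIRD is running, a command such as `ip netns exec ns1 ping -c 3 10.42.2.11` on host A is one way to check cross-host connectivity.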