Reference: https://www.cnblogs.com/goldsunshine/p/10701242.html

1. Installation

1.1 Install k8s

Install k8s 1.16 or 1.17. The network plugin is installed in the steps that follow.

1.2 Download the calico.yaml manifest

curl -O https://docs.projectcalico.org/v3.8/manifests/calico.yaml

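In my tests, every version from v3.8 through v3.11 works.

Set the k8s pod CIDR according to your local network. The downloaded manifest uses 192.168.0.0/16 as its default pod pool, so if your servers are in the 192.168.x.x range, change the pod CIDR to 10.244.0.0/16 so that the two ranges do not overlap: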

sed -i 's/192.168.0.0/10.244.0.0/g' calico.yaml
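Why the replacement matters can be checked with Python's standard ipaddress module. This is a minimal sketch, assuming the hosts sit in 192.168.19.0/24 (the subnet of the example servers in this article); pool_conflicts is a hypothetical helper, not part of Calico.

```python
from __future__ import annotations

import ipaddress

def pool_conflicts(pod_cidr: str, host_cidrs: list[str]) -> list[str]:
    """Return the host subnets that overlap the given pod CIDR."""
    pool = ipaddress.ip_network(pod_cidr)
    return [h for h in host_cidrs if pool.overlaps(ipaddress.ip_network(h))]

# Assumption: the servers live in 192.168.19.0/24, as in the examples below.
hosts = ["192.168.19.0/24"]
print(pool_conflicts("192.168.0.0/16", hosts))  # default pool in calico.yaml -> ['192.168.19.0/24']
print(pool_conflicts("10.244.0.0/16", hosts))   # pool after the sed replacement -> []
```

An empty list means the pod pool and the host network can coexist without ambiguous routes.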

2. Installing in IPIP mode

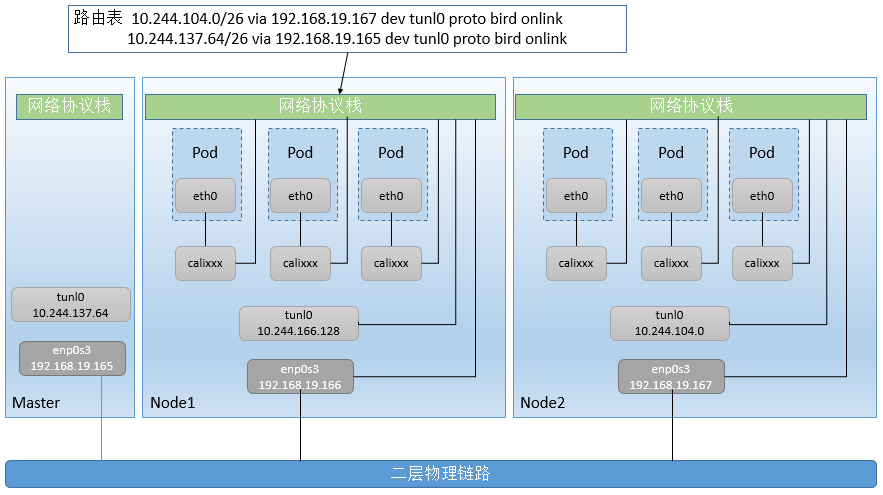

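IPIP mode: a tunl0 interface is created on every node, and this tunnel connects the container networks of all nodes. The official documentation recommends it when hosts are on different IP subnets, for example hosts in different AWS regions.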
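To make "tunnel" concrete: IPIP (IP protocol number 4) prepends a second, outer IPv4 header whose addresses are the node IPs, while the inner header keeps the pod IPs. The Python sketch below only illustrates that header layout; Calico relies on the kernel's tunl0 device rather than building packets like this, and ipip_encapsulate is a hypothetical helper.

```python
import socket
import struct

IPPROTO_IPIP = 4  # IP-in-IP; tcpdump prints it as "ipip-proto-4"

def ipv4_checksum(header: bytes) -> int:
    """One's-complement sum of the header's 16-bit words (RFC 791 header checksum)."""
    total = 0
    for i in range(0, len(header), 2):
        total += (header[i] << 8) | header[i + 1]
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def ipip_encapsulate(inner_packet: bytes, outer_src: str, outer_dst: str, ttl: int = 64) -> bytes:
    """Prepend a 20-byte outer IPv4 header (no options) carrying inner_packet as payload."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        (4 << 4) | 5,             # version 4, IHL = 5 32-bit words (20 bytes)
        0,                        # DSCP / ECN
        20 + len(inner_packet),   # total length of the outer packet
        0,                        # identification
        0,                        # flags / fragment offset
        ttl,
        IPPROTO_IPIP,             # protocol 4: the payload is another IPv4 packet
        0,                        # checksum, filled in below
        socket.inet_aton(outer_src),
        socket.inet_aton(outer_dst),
    )
    checksum = ipv4_checksum(header)
    header = header[:10] + struct.pack("!H", checksum) + header[12:]
    return header + inner_packet

# Placeholder inner packet: 84 zero bytes stand in for the pod's
# 20-byte IPv4 header + 64-byte ICMP echo (not a real packet).
inner = bytes(84)
outer = ipip_encapsulate(inner, "192.168.19.166", "192.168.19.167")
print(len(outer), outer[9])  # 104 4
```

The only cost of the tunnel on the wire is these 20 extra bytes per packet, plus the work of adding and stripping them on each node.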

The downloaded calico.yaml is configured for IPIP mode by default, so it can be installed as-is:

kubectl create -f calico.yaml

Network architecture after installation:

2.1 Interfaces and routes on each server

2.1.1 Server 192.168.19.165

Interfaces

4: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1440 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 10.244.137.64/32 brd 10.244.137.64 scope global tunl0

valid_lft forever preferred_lft forever

Routing table

10.244.104.0/26 via 192.168.19.167 dev tunl0 proto bird onlink

10.244.166.128/26 via 192.168.19.166 dev tunl0 proto bird onlink

2.1.2 Server 192.168.19.166

Interfaces

4: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1440 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 10.244.166.128/32 brd 10.244.166.128 scope global tunl0

valid_lft forever preferred_lft forever

Routing table

10.244.104.0/26 via 192.168.19.167 dev tunl0 proto bird onlink

10.244.137.64/26 via 192.168.19.165 dev tunl0 proto bird onlink

2.1.3 Server 192.168.19.167

Interfaces

4: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1440 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 10.244.104.0/32 brd 10.244.104.0 scope global tunl0

valid_lft forever preferred_lft forever

Routing table

10.244.137.64/26 via 192.168.19.165 dev tunl0 proto bird onlink

10.244.166.128/26 via 192.168.19.166 dev tunl0 proto bird onlink
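The three tables follow one pattern: each node owns a /26 block (64 addresses), and the blocks of the other two nodes are routed through tunl0 to the node that owns them. The kernel chooses among matching routes by longest-prefix match; the sketch below applies that rule to the Calico routes of 192.168.19.165. Other entries of a real table, such as the default route, are deliberately left out, and Route/lookup are hypothetical names.

```python
import ipaddress
from typing import List, NamedTuple, Optional

class Route(NamedTuple):
    prefix: ipaddress.IPv4Network
    via: str
    dev: str

def lookup(routes: List[Route], dst: str) -> Optional[Route]:
    """Pick the matching route with the longest prefix (longest-prefix match)."""
    addr = ipaddress.ip_address(dst)
    matches = [r for r in routes if addr in r.prefix]
    return max(matches, key=lambda r: r.prefix.prefixlen, default=None)

# Calico routes of 192.168.19.165 in IPIP mode, copied from the table above.
# The node's own block (10.244.137.64/26) is not in this list.
ROUTES_165 = [
    Route(ipaddress.ip_network("10.244.104.0/26"), "192.168.19.167", "tunl0"),
    Route(ipaddress.ip_network("10.244.166.128/26"), "192.168.19.166", "tunl0"),
]

# Each /26 block holds 64 addresses, e.g. 10.244.104.0 - 10.244.104.63.
print(ipaddress.ip_network("10.244.104.0/26").num_addresses)  # 64
print(lookup(ROUTES_165, "10.244.104.1"))
```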

2.2 Testing

2.2.1 Create test pods

kubectl run busybox --image=busybox --generator=run-pod/v1 --command -- sleep 36000m

kubectl run busybox1 --image=busybox --generator=run-pod/v1 --command -- sleep 36000m

kubectl run busybox2 --image=busybox --generator=run-pod/v1 --command -- sleep 36000m

kubectl run busybox3 --image=busybox --generator=run-pod/v1 --command -- sleep 36000m

kubectl run busybox4 --image=busybox --generator=run-pod/v1 --command -- sleep 36000m

View the pods:

# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox1 1/1 Running 0 48m 10.244.166.129 node1 <none> <none>

busybox3 1/1 Running 0 48m 10.244.104.1 node2 <none> <none>

# only two of the pods are listed here

2.2.2 Ping between pods

busybox1 runs on node1, and busybox3 (10.244.104.1) runs on node2.

Use kubectl exec to enter the busybox1 pod and run ping 10.244.104.1.

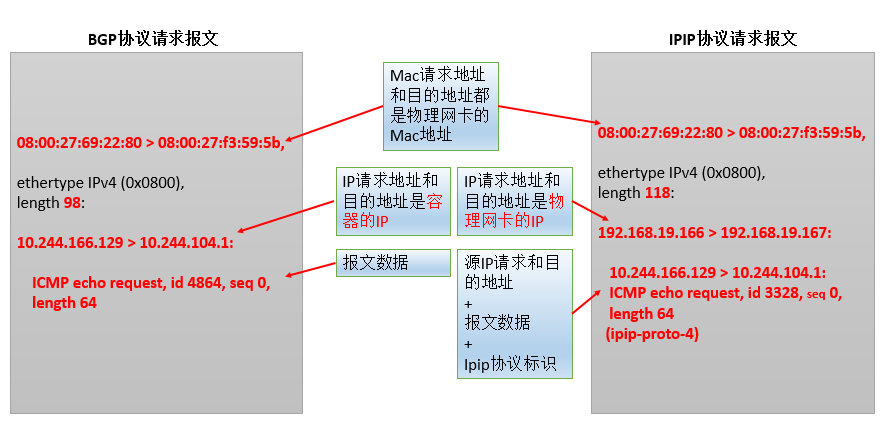

Capturing on the NICs of node1 and node2 shows that the packets carry an additional IP-in-IP tunnel header:

# tcpdump -i enp0s3 -ne 'host not 192.168.19.201'|grep 10.244

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes

16:00:45.800954 08:00:27:69:22:80 > 08:00:27:f3:59:5b, ethertype IPv4 (0x0800), length 118: 192.168.19.166 > 192.168.19.167: 10.244.166.129 > 10.244.104.1: ICMP echo request, id 3328, seq 0, length 64 (ipip-proto-4)

16:00:45.801414 08:00:27:f3:59:5b > 08:00:27:69:22:80, ethertype IPv4 (0x0800), length 118: 192.168.19.167 > 192.168.19.166: 10.244.104.1 > 10.244.166.129: ICMP echo reply, id 3328, seq 0, length 64 (ipip-proto-4)
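The frame length of 118 in this capture can be accounted for header by header. A small arithmetic sketch, assuming busybox's default 56-byte ping payload (consistent with "ICMP ... length 64", i.e. an 8-byte ICMP header plus 56 bytes) and IPv4 headers without options; icmp_frame_length is a hypothetical helper.

```python
# Header sizes in bytes; IPv4 headers are assumed to carry no options.
ETH_HEADER = 14        # Ethernet II header; tcpdump's "length" here excludes the FCS
IPV4_HEADER = 20
ICMP_ECHO_HEADER = 8

def icmp_frame_length(payload: int = 56, ipip: bool = False) -> int:
    """Frame length tcpdump -e reports for one ICMP echo packet."""
    length = ETH_HEADER + IPV4_HEADER + ICMP_ECHO_HEADER + payload
    if ipip:
        length += IPV4_HEADER  # the outer node-to-node IPv4 header added by tunl0
    return length

print(icmp_frame_length(ipip=True))   # 118, as in the capture above
print(icmp_frame_length(ipip=False))  # 98, the same ping without IPIP
```

The 20-byte difference is exactly the outer header, which is what disappears in the BGP-mode captures later in this article.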

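2.3 Packet flow

For a step-by-step analysis of the packet flow, see sections "4.6 Data flow analysis" and "2.6 Data flow analysis" in my related article "Linux ipip tunnel test 2: simulating a Calico network (three hosts, single NIC, multiple namespaces)".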

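3. Installing in BGP mode ★★★

BGP mode: Calico is installed as a DaemonSet on every node, and each host runs BIRD (a BGP client) that advertises the IP block assigned to each node in the Calico network to the other hosts in the cluster. Traffic is forwarded through the NIC that carries the host's default gateway (e.g. eth0).

Compared with IPIP, the biggest difference in BGP mode is that the tunnel device tunl0 is gone. As described above, in IPIP mode traffic between pods is sent to tunl0, which forwards it to the peer node. In BGP mode, pod-to-pod traffic leaves the NIC directly for its destination, skipping the tunl0 step.

Therefore, if all nodes of the k8s cluster are in the same IP subnet (so every node is a directly reachable next hop), disabling IPIP mode is recommended: with one less layer of encapsulation, forwarding is more efficient.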
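Conceptually, with Calico's default full BGP mesh, each node learns every other node's block with that node as the next hop. The sketch below only reproduces the resulting routes from a hypothetical node-to-block map (copied from the tables in this article); it does not speak BGP, BIRD does the real work. The IPIP tables above have the same shape, only the device differs.

```python
import ipaddress
from typing import Dict, List

# Hypothetical input: node IP -> pod block owned by that node,
# copied from the routing tables shown in this article.
BLOCKS: Dict[str, str] = {
    "192.168.19.165": "10.244.137.64/26",
    "192.168.19.166": "10.244.166.128/26",
    "192.168.19.167": "10.244.104.0/26",
}

def routes_learned_by(node: str, blocks: Dict[str, str], dev: str = "enp0s3") -> List[str]:
    """Routes a node ends up with once every peer has advertised its block."""
    learned = [
        (ipaddress.ip_network(block), peer)
        for peer, block in blocks.items()
        if peer != node  # a node's own block is not routed via a peer
    ]
    learned.sort()  # order by network for stable output
    return [f"{net} via {peer} dev {dev} proto bird" for net, peer in learned]

for line in routes_learned_by("192.168.19.165", BLOCKS):
    print(line)
```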

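3.1 Remove the previously deployed IPIP setup

Skip this step if you did not deploy IPIP mode earlier.

kubectl delete pod busybox busybox1 busybox2 busybox3 busybox4

kubectl delete -f calico.yaml

Reboot all three machines. The tunl0 device and the routes created by the IPIP installation are only removed after a reboot, and only then does the difference between BGP mode and IPIP mode become visible.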

3.2 Modify the manifest

cp calico.yaml calico-bpg.yaml

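Then edit the env section of the calico-node container in calico-bpg.yaml. Note that for v3.8 you only need to set CALICO_IPV4POOL_IPIP to off; for v3.9 and later you also need to add FELIX_IPINIPENABLED with the value false: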

- name: CALICO_IPV4POOL_IPIP  # disable IPIP mode
  value: "off"
- name: FELIX_IPINIPENABLED   # disable IPIP in Felix
  value: "false"

3.3 Run the installation

kubectl create -f calico-bpg.yaml

Network architecture after installation:

3.4 Testing

3.4.1 Create several pods for testing

kubectl run busybox --image=busybox --generator=run-pod/v1 --command -- sleep 36000m

kubectl run busybox2 --image=busybox --generator=run-pod/v1 --command -- sleep 36000m

kubectl run busybox3 --image=busybox --generator=run-pod/v1 --command -- sleep 36000m

kubectl run busybox4 --image=busybox --generator=run-pod/v1 --command -- sleep 36000m

kubectl run busybox5 --image=busybox --generator=run-pod/v1 --command -- sleep 36000m

View the pods:

# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox 1/1 Running 0 5m56s 10.244.104.0 node2 <none> <none>

busybox2 1/1 Running 0 4m21s 10.244.104.1 node2 <none> <none>

busybox3 1/1 Running 0 4m21s 10.244.166.129 node1 <none> <none>

busybox4 1/1 Running 0 4m20s 10.244.166.130 node1 <none> <none>

busybox5 1/1 Running 0 2m32s 10.244.104.2 node2 <none> <none>

3.4.2 Check the NICs

ip a shows that the tunl0 device is gone.

3.4.3 Check the routes on all three machines

Routes on 192.168.19.165

10.244.104.0/26 via 192.168.19.167 dev enp0s3 proto bird

10.244.166.128/26 via 192.168.19.166 dev enp0s3 proto bird

Routes on 192.168.19.166

10.244.104.0/26 via 192.168.19.167 dev enp0s3 proto bird

10.244.137.64/26 via 192.168.19.165 dev enp0s3 proto bird

Routes on 192.168.19.167

10.244.137.64/26 via 192.168.19.165 dev enp0s3 proto bird

10.244.166.128/26 via 192.168.19.166 dev enp0s3 proto bird

3.4.4 Packet capture test

Pick a pod on node1 and ping 10.244.104.1 from it; here we use busybox3:

kubectl exec -ti busybox3 -- sh

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

3: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1440 qdisc noqueue

link/ether 9a:93:56:97:a9:ac brd ff:ff:ff:ff:ff:ff

inet 10.244.166.129/32 scope global eth0

valid_lft forever preferred_lft forever

/ # ping 10.244.104.1

PING 10.244.104.1 (10.244.104.1): 56 data bytes

64 bytes from 10.244.104.1: seq=0 ttl=62 time=0.538 ms

64 bytes from 10.244.104.1: seq=1 ttl=62 time=0.477 ms

64 bytes from 10.244.104.1: seq=2 ttl=62 time=0.473 ms

64 bytes from 10.244.104.1: seq=3 ttl=62 time=0.497 ms

64 bytes from 10.244.104.1: seq=4 ttl=62 time=0.454 ms

64 bytes from 10.244.104.1: seq=5 ttl=62 time=0.522 ms

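The output shows that busybox3's eth0 has the IP address 10.244.166.129 and that its veth peer is interface index 5 (if5). Since busybox3 runs on node1, we look up interface index 5 on node1 (output omitted) and find cali0d5af60a6c5, so we capture on cali0d5af60a6c5: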

# tcpdump -i cali0d5af60a6c5

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on cali0d5af60a6c5, link-type EN10MB (Ethernet), capture size 262144 bytes

17:02:40.084812 IP 10.244.166.129 > 10.244.104.1: ICMP echo request, id 4864, seq 0, length 64

17:02:40.085299 IP 10.244.104.1 > 10.244.166.129: ICMP echo reply, id 4864, seq 0, length 64

17:02:41.085717 IP 10.244.166.129 > 10.244.104.1: ICMP echo request, id 4864, seq 1, length 64

17:02:41.086220 IP 10.244.104.1 > 10.244.166.129: ICMP echo reply, id 4864, seq 1, length 64

17:02:45.105098 ARP, Request who-has 10.244.166.129 tell node1, length 28

17:02:45.105238 ARP, Reply 10.244.166.129 is-at 9a:93:56:97:a9:ac (oui Unknown), length 28

17:02:45.233118 ARP, Request who-has 169.254.1.1 tell 10.244.166.129, length 28

17:02:45.233145 ARP, Reply 169.254.1.1 is-at ee:ee:ee:ee:ee:ee (oui Unknown), length 28

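The capture shows that the ping request's source address is 10.244.166.129 and its destination is 10.244.104.1.

There are also two ARP exchanges: node1 asks who-has 10.244.166.129 (the pod's address), and the pod asks who-has 169.254.1.1. In Calico, 169.254.1.1 is the pod's default gateway, and the host side of the veth pair answers it by proxy ARP with the fixed MAC ee:ee:ee:ee:ee:ee, as the last reply shows.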
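Finding the host-side peer of a pod's veth (eth0@if5 on the pod, cali0d5af60a6c5 on node1) can be scripted. The sketch below parses `ip link` / `ip a` style text; the pod-side line is taken from the busybox3 output above, but HOST_OUTPUT is a hypothetical sample, because the article omits node1's listing. peer_ifindex and iface_by_index are illustrative names.

```python
import re
from typing import Optional

def peer_ifindex(pod_link_line: str) -> Optional[int]:
    """Peer ifindex from a pod-side line such as '3: eth0@if5: <...>'."""
    m = re.match(r"\s*\d+:\s+[^@:\s]+@if(\d+):", pod_link_line)
    return int(m.group(1)) if m else None

def iface_by_index(host_link_output: str, index: int) -> Optional[str]:
    """Name of the interface with the given index in host `ip link` / `ip a` output."""
    for line in host_link_output.splitlines():
        # Interface header lines start at column 0 with "<index>: <name>".
        m = re.match(r"(\d+):\s+([^@:\s]+)", line)
        if m and int(m.group(1)) == index:
            return m.group(2)
    return None

# Hypothetical host output; only the format matters here.
HOST_OUTPUT = """\
2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP
5: cali0d5af60a6c5@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP
"""
idx = peer_ifindex("3: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1440 qdisc noqueue")
print(idx, iface_by_index(HOST_OUTPUT, idx))  # 5 cali0d5af60a6c5
```

When run directly on node1, Python's `socket.if_indextoname(5)` returns the same name without any text parsing.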

Meanwhile, capture on node1's enp0s3 (192.168.19.166):

tcpdump -i enp0s3 -ne host 10.244.104.1

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes

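# Here the forwarded packet's source IP is 10.244.166.129 (the source container's IP) and its source MAC is 08:00:27:69:22:80, which is the MAC address of node1 (192.168.19.166)

# Here the forwarded packet's destination IP is 10.244.104.1 (the destination container's IP) and its destination MAC is 08:00:27:f3:59:5b, which is the MAC address of node2 (192.168.19.167)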

17:02:40.084882 08:00:27:69:22:80 > 08:00:27:f3:59:5b, ethertype IPv4 (0x0800), length 98: 10.244.166.129 > 10.244.104.1: ICMP echo request, id 4864, seq 0, length 64

17:02:40.085282 08:00:27:f3:59:5b > 08:00:27:69:22:80, ethertype IPv4 (0x0800), length 98: 10.244.104.1 > 10.244.166.129: ICMP echo reply, id 4864, seq 0, length 64

17:02:41.085735 08:00:27:69:22:80 > 08:00:27:f3:59:5b, ethertype IPv4 (0x0800), length 98: 10.244.166.129 > 10.244.104.1: ICMP echo request, id 4864, seq 1, length 64

17:02:41.086194 08:00:27:f3:59:5b > 08:00:27:69:22:80, ethertype IPv4 (0x0800), length 98: 10.244.104.1 > 10.244.166.129: ICMP echo reply, id 4864, seq 1, length 64


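The capture shows that no extra IPIP header is added.

This is because the routing table contains:

10.244.104.0/26 via 192.168.19.167 dev enp0s3 proto bird

so the packet is sent to the gateway 192.168.19.167. On node2, you can confirm that the MAC address of enp0s3 is 08:00:27:f3:59:5b: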

# ip a s enp0s3

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:f3:59:5b brd ff:ff:ff:ff:ff:ff

inet 192.168.19.167/24 brd 192.168.19.255 scope global noprefixroute enp0s3

valid_lft forever preferred_lft forever

inet6 fe80::cbe8:e3eb:213f:40f/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::c865:9303:84a2:7e25/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::4d70:15c7:3281:ada5/64 scope link noprefixroute

valid_lft forever preferred_lft forever

On node1, the MAC address of enp0s3 is 08:00:27:69:22:80:

# ip a s enp0s3

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:69:22:80 brd ff:ff:ff:ff:ff:ff

inet 192.168.19.166/24 brd 192.168.19.255 scope global noprefixroute enp0s3

valid_lft forever preferred_lft forever

inet6 fe80::cbe8:e3eb:213f:40f/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::c865:9303:84a2:7e25/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Both match the MAC addresses in the earlier capture:

17:02:41.085735 08:00:27:69:22:80 > 08:00:27:f3:59:5b, ethertype IPv4 (0x0800), length 98: 10.244.166.129 > 10.244.104.1: ICMP echo request, id 4864, seq 1, length 64

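node1 sees that the packet is destined for 10.244.104.1. The route 10.244.104.0/26 via 192.168.19.167 dev enp0s3 proto bird names 192.168.19.167 as the gateway, an ARP broadcast resolves 192.168.19.167 to the MAC address 08:00:27:f3:59:5b, and node1 therefore sets the packet's destination MAC to 08:00:27:f3:59:5b while the IP addresses stay pod-to-pod.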
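The forwarding step just described only rewrites the Ethernet addresses. A minimal sketch of that rewrite, using the MACs and IPs from the captures in this section; the pod-side frame addressed to ee:ee:ee:ee:ee:ee is inferred from the ARP replies above, and Frame/forward are hypothetical names, not kernel APIs.

```python
from dataclasses import dataclass, replace
from typing import Dict

@dataclass(frozen=True)
class Frame:
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str

def forward(frame: Frame, gateway: str, egress_mac: str, arp_table: Dict[str, str]) -> Frame:
    """Rewrite the Ethernet addresses for the next hop; IP addresses are left untouched."""
    if gateway not in arp_table:
        raise LookupError(f"no ARP entry for {gateway}")
    return replace(frame, src_mac=egress_mac, dst_mac=arp_table[gateway])

# Frame as it leaves busybox3 towards its gateway 169.254.1.1 (proxy-ARP MAC).
from_pod = Frame("9a:93:56:97:a9:ac", "ee:ee:ee:ee:ee:ee", "10.244.166.129", "10.244.104.1")
# node1 picks gateway 192.168.19.167 from its route and sends out of enp0s3.
on_wire = forward(from_pod, gateway="192.168.19.167", egress_mac="08:00:27:69:22:80",
                  arp_table={"192.168.19.167": "08:00:27:f3:59:5b"})
print(on_wire)
```

The resulting frame carries exactly the addresses seen in the enp0s3 capture: node MACs at layer 2, pod IPs at layer 3.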

Capture on enp0s3 of node2 (192.168.19.167):

# tcpdump -i enp0s3 -ne host 10.244.104.1

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes

17:02:24.845273 08:00:27:69:22:80 > 08:00:27:f3:59:5b, ethertype IPv4 (0x0800), length 98: 10.244.166.129 > 10.244.104.1: ICMP echo request, id 4864, seq 0, length 64

17:02:24.845441 08:00:27:f3:59:5b > 08:00:27:69:22:80, ethertype IPv4 (0x0800), length 98: 10.244.104.1 > 10.244.166.129: ICMP echo reply, id 4864, seq 0, length 64

17:02:25.846927 08:00:27:69:22:80 > 08:00:27:f3:59:5b, ethertype IPv4 (0x0800), length 98: 10.244.166.129 > 10.244.104.1: ICMP echo request, id 4864, seq 1, length 64

17:02:25.847024 08:00:27:f3:59:5b > 08:00:27:69:22:80, ethertype IPv4 (0x0800), length 98: 10.244.104.1 > 10.244.166.129: ICMP echo reply, id 4864, seq 1, length 64

4. Comparison of captured packets